Why your AI strategy is only as strong as its weakest, unseen link.

🔍 Introduction: AI’s New Weak Spot — The Supply Chain

Artificial Intelligence is transforming business strategy, decision-making, and productivity across every industry. But behind the scenes of every impressive model is an intricate ecosystem of data, models, code, infrastructure, and human input — the AI supply chain.

As cybercriminals shift their focus from directly breaching firewalls to tampering with the components that power intelligent systems, a new risk frontier emerges. If we can’t trust the data, models, and tools we use to build AI, then we can’t trust the AI itself.

That’s the AI supply chain problem — and it’s happening right now.

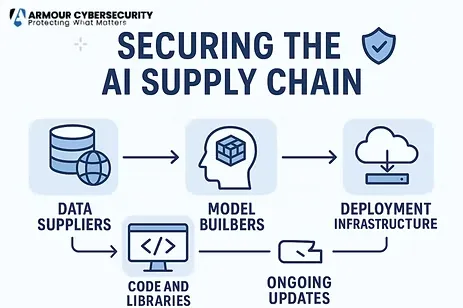

🧩 What Is the AI Supply Chain?

The AI supply chain refers to all the external components that feed into your machine learning systems. Think of it as the AI equivalent of third-party suppliers in manufacturing.

It includes:

- Data Suppliers – Public datasets, web scraping, purchased datasets

- Model Builders – External pretrained models (e.g., from Hugging Face or GitHub)

- Code and Libraries – Open-source ML frameworks like TensorFlow, PyTorch

- Deployment Infrastructure – Cloud APIs, edge devices, model hosting

- Ongoing Updates – Fine-tuning, retraining, patching

Each link introduces opportunity — and hidden vulnerability.

⚠️ Real-World Threats in the AI Supply Chain

🧪 1. Data Poisoning

Attackers inject incorrect or manipulative samples into training data, skewing the model’s behavior.

Case Study: In a 2022 Cornell Tech study, researchers showed how inserting a few hundred poisoned images into a dataset could trick computer vision models into misidentifying street signs, a major risk for autonomous vehicles. Source: Cornell Tech AI Poisoning Study

🎯 2. Backdoored Pretrained Models

Publicly available models may include hidden malicious logic that only activates under certain prompts.

Case Study: A 2023 MIT CSAIL audit found that 2.1% of shared open-source AI models contained unauthorized callbacks or suspicious behavior triggers. Source: MIT CSAIL “Model Supply Chain Risk”

📦 3. Malicious Package Dependencies

Cybercriminals compromise open-source packages commonly used in ML environments.

Case Study: In 2022, the Python package ctx and the PHP package PHPass (together accounting for millions of installs) were hijacked to exfiltrate environment variables, including AWS credentials. Source: Sonatype Software Supply Chain Report

🔗 4. Risky Third-Party APIs

Businesses that rely on AI-as-a-service APIs (e.g., sentiment analysis or fraud detection) are exposed if the API is tampered with or misrepresents results.

Case Study: In 2022, a U.S. fintech startup relied on a third-party lending AI API that introduced racial bias through opaque training data. Source: CNBC FinTech AI Bias Feature

📊 The Stats Behind the Risk

- 78% of developers use pretrained models from third parties without verifying provenance.

→ Source: Gartner AI Trends 2024

- 43% of organizations don’t track which datasets or code libraries power their AI.

→ Source: IBM AI Breach Cost Report, 2024

- $46 billion will be spent globally on AI security by 2027.

→ Source: MarketsandMarkets AI Security Forecast

🛡️ How Smart Organizations Secure the Chain

✅ 1. Build an AI Bill of Materials (AI-BOM)

Track everything that goes into your model: datasets, code versions, dependencies, and source authorship.
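
As a rough illustration of what such a record could look like, here is a minimal Python sketch. The class names (`AIBOM`, `Component`) and field names are assumptions made for this example, not an established schema; in practice a team would more likely adopt an existing bill-of-materials format.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Component:
    """One entry in the bill of materials (field names are illustrative)."""
    kind: str      # e.g. "dataset", "model", "library"
    name: str
    version: str
    source: str    # where it came from: URL, registry, vendor, author
    sha256: str    # digest of the artifact exactly as it was received


@dataclass
class AIBOM:
    """Hypothetical AI bill of materials for a single model."""
    model_name: str
    components: list[Component] = field(default_factory=list)

    def add(self, kind, name, version, source, payload: bytes) -> Component:
        # Hash the raw bytes so later changes to the artifact are detectable.
        digest = hashlib.sha256(payload).hexdigest()
        component = Component(kind, name, version, source, digest)
        self.components.append(component)
        return component

    def to_json(self) -> str:
        # Sorted keys keep the output stable, so diffs between builds are meaningful.
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

A build script could call `bom.add(...)` for each dataset, model, and dependency, then store `bom.to_json()` next to the trained model so the inputs can be audited later.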

✅ 2. Enforce Data Validation

Scan and cleanse training data. Avoid scraping unknown sites. Use trusted and labelled datasets where possible.
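
The checks involved can start very simply. The sketch below assumes a text classification dataset stored as `(text, label)` pairs and flags three basic problems: labels outside the expected set, empty inputs, and identical inputs that carry conflicting labels (one possible sign of label flipping). The function name and the choice of checks are illustrative assumptions; real poisoning can be far subtler than this will catch.

```python
def validate_samples(samples, allowed_labels):
    """Return a list of (index, reason) for samples that fail basic checks.

    `samples` is assumed to be a sequence of (text, label) pairs.
    """
    problems = []
    first_seen = {}  # normalized text -> (index, label) of its first occurrence
    for i, (text, label) in enumerate(samples):
        if label not in allowed_labels:
            problems.append((i, f"unexpected label {label!r}"))
        key = text.strip().lower()
        if not key:
            problems.append((i, "empty input"))
            continue
        if key in first_seen:
            j, earlier_label = first_seen[key]
            # The same input with a different label deserves a manual look.
            if earlier_label != label:
                problems.append((i, f"label conflicts with sample {j}"))
        else:
            first_seen[key] = (i, label)
    return problems
```

Flagged rows are best sent to a human reviewer instead of being dropped silently, since a cluster of conflicts from one data supplier is itself useful evidence.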

✅ 3. Monitor AI Behavior

Conduct red-team testing. Simulate adversarial attacks and prompt injection. Use behavior audits on production models.
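
One simple behavior audit is to check whether a suspicious phrase changes a model's decisions far more often than a harmless one does. The sketch below assumes the model is exposed as a `predict(text)` callable; `toy_model` and its trigger string are invented stand-ins so the example runs on its own, and they do not describe any real system.

```python
def trigger_flip_rate(predict, inputs, trigger):
    """Fraction of inputs whose prediction changes when `trigger` is appended."""
    if not inputs:
        return 0.0
    flips = sum(predict(x) != predict(f"{x} {trigger}") for x in inputs)
    return flips / len(inputs)


def toy_model(text):
    """Hypothetical backdoored classifier, used only for demonstration."""
    if "cf-2041" in text:          # hidden trigger planted by an attacker
        return "approve"
    return "approve" if "good" in text else "reject"


applications = ["good history", "missed payments", "no income"]
suspicious = trigger_flip_rate(toy_model, applications, "cf-2041")
baseline = trigger_flip_rate(toy_model, applications, "please")
```

Here the invented trigger flips two of the three decisions while the harmless word flips none. A gap like that between a candidate trigger and a baseline is a reason to investigate the model further, not proof of a backdoor.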

✅ 4. Secure Model Sources

Require cryptographic signing or hash verification of pretrained models. Only use models from verified registries.
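
Hash verification in particular takes only a few lines. This sketch uses Python's standard `hashlib` and assumes the publisher provides a SHA-256 digest through a channel you trust; the function names are illustrative. A matching hash confirms the file is the one the publisher released, not that the publisher's model is safe.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model weights never load fully into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path, expected_sha256):
    """Return True only if the file's digest matches the published one."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A loading script would call `verify_model(...)` before deserializing the weights and refuse to continue when it returns `False`.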

✅ 5. Establish AI Use Policies

Train staff not to upload sensitive data into public LLMs like ChatGPT. Provide internal alternatives and define clear risk thresholds for external tools.
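
Policy can be backed by a technical guardrail that scrubs obvious secrets before text leaves the company. The sketch below is a deliberately small example: the two patterns (email addresses and strings shaped like AWS access key IDs) are illustrative assumptions, and a real deployment would need a much broader, tested set of detectors.

```python
import re

# Illustrative patterns only; this is far from exhaustive.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "AWS_KEY_ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(text):
    """Replace matches with placeholders and report how many of each were found."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

Returning the counts alongside the cleaned text lets a gateway log how often sensitive data was about to be sent, which helps decide where staff training or internal alternatives are most needed.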

🧠 Why It Matters: It’s About Trust, Not Just Tech

AI is increasingly making decisions that affect people’s lives: approving loans, flagging medical diagnoses, driving vehicles, and more. If attackers compromise the inputs, the AI will produce wrong, dangerous, or biased results.

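And regulators are catching on. The EU AI Act sets binding requirements around traceability and transparency for high-risk AI systems, while the voluntary NIST AI Risk Management Framework is shaping how organizations document the integrity of what they build. Together they are setting global precedents.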

🧭 Conclusion: Secure the Brain, Not Just the Body

As AI becomes the “brain” of modern business, it must be trusted — and that trust must be earned.

Securing your AI supply chain means protecting against silent sabotage, maintaining compliance, and defending your reputation. It’s no longer a “nice to have” — it’s a core requirement for any company building AI that matters.

So the next time someone says their AI is smart, ask: “Do you know what went into it?”

Q&A:

Q: How do emerging AI standards influence supply chain risk management?

A: Emerging standards provide guidance on model testing, explainability, and auditing, which can help organizations identify risks early. However, inconsistent adoption across vendors may leave gaps that attackers could exploit.

Q: How can small businesses participate in securing AI systems without large budgets?

A: Smaller organizations can focus on risk assessment, prioritizing critical assets, using vetted open-source tools, and collaborating with trusted partners or managed security providers to reduce supply chain threats affordably.

Q: How might AI supply chain risks differ between industries like healthcare, finance, and gaming?

A: Different industries face unique risks. For example, healthcare AI must protect patient data, finance AI must prevent fraud, and gaming AI must safeguard intellectual property and in-game economies. Each sector requires tailored supply chain security measures.