Shadow AI & the New Age of Accidental Breach

Not every data breach starts with malware. Some begin with good intentions: a project manager using an AI tool to summarize meeting notes, a marketer drafting emails with a chatbot, or a developer optimizing code with a cloud-based assistant. No security tools are bypassed. No malicious actors are involved. Yet somehow, sensitive data slips out.

Welcome to the world of Shadow AI.

This is the reality of Shadow AI: unsanctioned, unmanaged artificial intelligence tools being used inside organizations without visibility or control. As AI adoption accelerates, so too does the risk of accidental data exposure — not through malicious actors, but through trusted employees using tools that fall outside IT’s line of sight.

In 2025, the breach isn’t breaking in.

It’s leaking out.

The Quiet Rise of Shadow AI

In today’s enterprise landscape, AI adoption has outpaced governance. Employees are using AI tools in increasing numbers, often with little to no oversight, to boost productivity, automate tasks, or experiment. From ChatGPT and Gemini to specialized tools like Jasper, Copy.ai, GitHub Copilot, and hundreds more, the ecosystem of generative AI is exploding.

While ChatGPT often takes center stage in media conversations, it’s just one of many. Many organizations today are exposed through a wide range of unvetted AI tools. These tools are often introduced by well-meaning staff trying to work smarter, who don't realize they may be creating compliance or legal risks along the way.

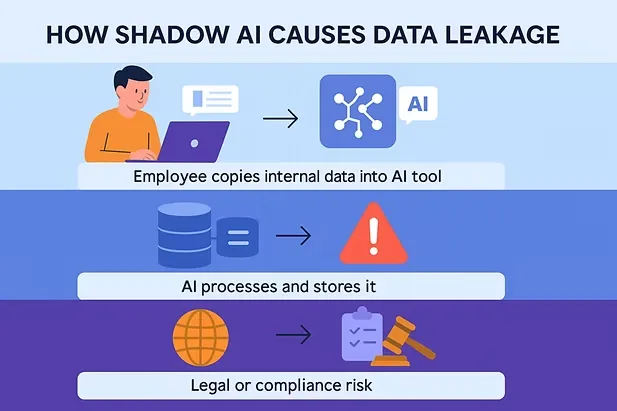

The Real Risk Isn’t the Tool — It’s the Data

Many AI tools don’t need to “access” your environment to cause harm. They simply need your employees to input sensitive data, and that’s where the problem starts.

- Financial statements shared for “analysis”

- Source code pasted into a code generator

- Confidential customer data used to create summaries or reports

Once submitted, these inputs leave your security perimeter. Depending on the provider’s terms, they may be stored, logged, or used to train future models, sometimes without encryption or contractual safeguards. In these cases, the data itself becomes the breach vector.

That’s why data governance is the first layer of protection in any AI-aware security strategy. Without clear boundaries around what can and cannot be entered into these tools, exposure is inevitable, even without a single alert going off.
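As a rough illustration of what a data boundary can look like in practice, the Python sketch below checks text for a few obviously sensitive patterns before it is pasted into an AI tool. The function names (`find_sensitive_data`, `luhn_valid`), the regular expressions, and the marker phrases are hypothetical examples, not part of any product. Pattern matching like this misses a lot and produces false positives, so treat it as a teaching aid rather than a substitute for a proper data loss prevention (DLP) control.

```python
import re

# Hypothetical example patterns; a real policy would be derived from your own
# data classification scheme and would need far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")  # 13-19 digits, optional spaces/dashes
MARKERS = ("confidential", "internal only", "do not distribute")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive_data(text: str) -> list:
    """Return reasons why this text should not be pasted into an unapproved AI tool."""
    reasons = []
    if EMAIL.search(text):
        reasons.append("email address")
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())  # strip spaces and dashes
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            reasons.append("payment card number")
            break
    lowered = text.lower()
    for marker in MARKERS:
        if marker in lowered:
            reasons.append(f"classification marker: {marker!r}")
    return reasons
```

For example, `find_sensitive_data("Summarize: card 4111 1111 1111 1111")` flags a payment card number (4111 1111 1111 1111 is a standard test number), while a harmless request such as "Make this paragraph sound more professional." returns an empty list.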

The Absence of Alerts — and Accountability

Unlike malware, Shadow AI doesn’t trip alarms or create obvious indicators of compromise. There’s no unauthorized login, no firewall bypass — just a user pasting a client contract into a chatbot to “make it sound more professional.” The simplicity makes it even more dangerous.

Worse still, these breaches are hard to detect after the fact: logs are sparse and SIEM rules rarely fire. Unless your organization specifically audits browser extensions, API activity, or clipboard data, you may never know it happened.
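One practical way to start building visibility is to look at traffic you already log. The sketch below makes a simplifying assumption: a web proxy or DNS resolver exports one line per request in a `timestamp user host` format. Real log formats vary. The code counts requests per user to a small list of AI service domains. The domain list, log format, and function names are illustrative assumptions. This approach will not see activity on personal devices or networks, and it reveals nothing about what data was actually sent.

```python
from collections import Counter
from typing import Iterable, Optional

# Illustrative, incomplete list of domains associated with generative AI services.
# Maintain your own list; vendors add and change domains over time.
AI_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "jasper.ai": "Jasper",
    "copy.ai": "Copy.ai",
}


def match_ai_service(host: str) -> Optional[str]:
    """Map a hostname (or any subdomain of a listed domain) to a known AI service name."""
    host = host.lower().rstrip(".")
    for domain, service in AI_DOMAINS.items():
        # Require an exact match or a dot boundary so "notcopy.ai" is not matched.
        if host == domain or host.endswith("." + domain):
            return service
    return None


def inventory_ai_usage(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, AI service) from lines shaped 'timestamp user host'."""
    usage = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines rather than failing the whole scan
        _, user, host = parts[:3]
        service = match_ai_service(host)
        if service:
            usage[(user, service)] += 1
    return usage
```

The output is an inventory, not an alert: it tells you who is using which services and how often, which is the starting point for deciding what to approve, replace, or restrict.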

AI Governance Is No Longer Optional

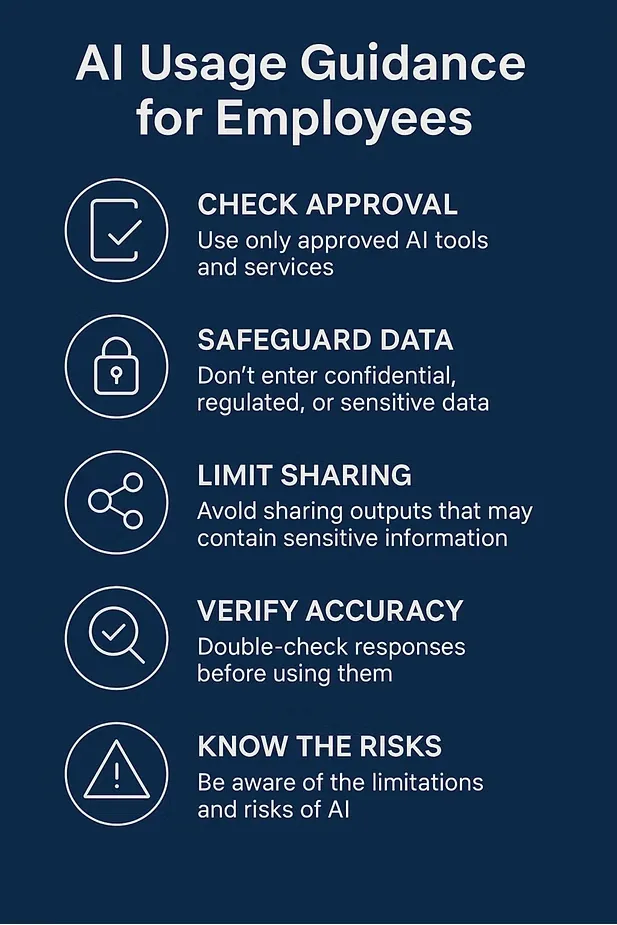

The key to controlling Shadow AI isn’t banning tools outright — it’s building AI governance frameworks that are enforceable, scalable, and culturally embedded.

This includes:

- A vetted list of approved tools

- Policies on what types of data can be shared

- Role-based restrictions and logging

- Mandatory training on acceptable use

- Legal review of vendor terms, including data retention
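Several of these framework elements can be combined into a single policy check: an approved-tool list, rules on data types, role-based restrictions, and logging. The following Python sketch shows one hypothetical way to express that. The tool names, roles, and classification levels are invented for illustration. In practice they would come from your own data classification scheme and vendor review.

```python
import logging
from dataclasses import dataclass
from enum import IntEnum

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai_policy")


class DataClass(IntEnum):
    """Hypothetical classification levels; higher values are more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class ToolPolicy:
    max_data_class: DataClass  # most sensitive data this tool may receive
    allowed_roles: frozenset   # roles permitted to use the tool


# Hypothetical policy table: names and limits are examples only and should
# come from your own legal review of each vendor's terms.
APPROVED_TOOLS = {
    "enterprise-chat": ToolPolicy(DataClass.CONFIDENTIAL, frozenset({"engineering", "marketing", "finance"})),
    "code-assistant": ToolPolicy(DataClass.INTERNAL, frozenset({"engineering"})),
}


def is_request_allowed(user: str, role: str, tool: str, data_class: DataClass) -> bool:
    """Decide whether a user may send data of a given class to a tool, logging every decision."""
    policy = APPROVED_TOOLS.get(tool)
    if policy is None:
        allowed, reason = False, "tool not on approved list"
    elif role not in policy.allowed_roles:
        allowed, reason = False, f"role {role!r} not permitted"
    elif data_class > policy.max_data_class:
        allowed, reason = False, f"{data_class.name} data exceeds tool limit {policy.max_data_class.name}"
    else:
        allowed, reason = True, "within policy"
    log.info("user=%s role=%s tool=%s data=%s allowed=%s reason=%s",
             user, role, tool, data_class.name, allowed, reason)
    return allowed
```

The check denies by default: any tool not explicitly on the approved list is refused outright. Every decision, allowed or not, is logged so there is an audit trail to review later.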

The goal isn’t to stifle innovation, but to balance enablement with control. If your employees are going to use AI, and they will, you must give them safe lanes to operate in.

Safe AI Use Starts With Culture

Technology alone won’t solve Shadow AI. Even with the best controls, employees may still turn to personal tools if corporate options are too slow, limited, or blocked.

That’s why organizations must train staff to think critically about AI use:

- What kind of data am I entering?

- Does this tool store inputs?

- Is there a safer, approved alternative?

Security must shift left, not just in DevOps but in daily behavior. Empowering users to ask “Is this a safe use of AI?” is more effective than locking everything down.

The Shadow Behind the Shadow: When AI Tools Are Built to Breach

Not all Shadow AI risks stem from innocent use. A more dangerous class of risk is emerging: AI tools designed with malicious intent. These range from compromised browser plugins to open-source models seeded with backdoors or data-siphoning mechanisms.

These tools may appear helpful on the surface, but in the background they may:

- Exfiltrate data submitted by users

- Monitor keystrokes or clipboard content

- Quietly forward inputs to unauthorized third-party servers, sometimes as part of broader corporate espionage campaigns

- Modify outputs to subtly manipulate decision-making

Unlike traditional malware, these tools exploit trust in AI itself, hiding in plain sight under the guise of productivity or automation. Without proper vetting, even a helpful-looking plugin or AI assistant can become a persistent insider threat.

This is why AI supply chain security and tool verification must become core components of Shadow AI governance — not just for what employees use, but for what those tools are actually doing behind the scenes.

An Overlooked Risk: Legal and Ethical Gaps

Recent headlines illustrate how blurry the lines have become. In July 2025, OpenAI CEO Sam Altman warned that there is no legal confidentiality when using ChatGPT as a therapist. The statement raised red flags across healthcare, HR, and compliance sectors (TechCrunch, 2025).

While this might sound like an edge case, it underscores a crucial reality: AI platforms are not bound by the same legal or ethical obligations as professionals, and using them inappropriately can open your business to reputational and legal risk, even if done unintentionally by an employee.

Conclusion: Shadow AI Is Not Malicious — But It Is Dangerous

Shadow AI isn’t malware. It’s not even misused AI in the traditional sense. It’s simply AI used without oversight, without boundaries, and without understanding. And in many organizations, that makes it more dangerous than any external threat actor.

The path forward isn’t fear — it’s governance. Build a framework, educate your people, secure your data, and embrace AI with control.

Because in the age of generative AI, the biggest threat might not come from the outside but from the trusted hands within.

Q&A: Shadow AI and Your Organization’s Risk

Q: Isn’t this just an IT issue? Why should I be concerned?

A: Shadow AI is not just a technical problem — it’s a business risk. It involves data governance, legal exposure, employee behavior, and third-party trust. IT may manage systems, but cybersecurity professionals are needed to identify, mitigate, and govern these risks strategically and holistically.

Q: We trust our employees — do we really need to monitor their AI use?

A: Trust is essential — but trust without visibility is risk. Most Shadow AI incidents are not malicious. They’re accidental. But even one well-meaning employee using an unvetted AI tool could leak customer data, proprietary code, or financial reports. It’s not about policing — it’s about protecting.

Q: Isn’t banning tools like ChatGPT enough?

A: Not really. Bans don’t stop usage — they just push it underground. What’s needed is a clear framework: which tools are approved, what data can be used, and what behavior is safe. That requires governance, not guesswork — and that’s where we come in.

Q: Can’t our IT team handle this?

A: Most IT teams are already stretched thin managing infrastructure, endpoints, and support. Shadow AI introduces legal, ethical, and data privacy layers that go beyond IT’s typical scope. What you need is a dedicated cybersecurity partner with the expertise to help you build safe, compliant, and AI-aware practices across your organization.

Q: What should we do right now?

A: Start by asking:

- Do we know which AI tools are being used internally?

- Have we defined what types of data are off-limits?

- Do we have any AI usage policy in place?

If the answer to any of these is “no” or “not sure,” it’s time to talk.

Educating employees on safe AI usage and providing clear guidance is one of the most effective ways to reduce Shadow AI risks. With the right governance and support, your team can use AI productively without compromising security.

We’re here to help you build the roadmap and protect what matters most.